Rasterization

Rasterization is a technique that produces an image as seen from a single viewpoint: it projects the scene’s triangles onto the screen and determines which pixels each one covers. This is the approach GPUs have used from the start. As GPUs became more and more powerful, rasterization made real-time graphics like the ones we see in games possible, and this growth in GPU power eventually led to 3D gaming as we know it today.
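To make the idea concrete, here is a minimal Python sketch of the core coverage step. It assumes the triangle has already been projected to 2D screen coordinates and uses the classic edge-function test to decide which pixel centers fall inside it. The names `edge` and `rasterize_triangle` are hypothetical, and real GPUs add clipping, depth testing, and massive parallelism on top of this.

```python
def edge(ax, ay, bx, by, px, py):
    """Edge function: twice the signed area of triangle (a, b, p).
    Its sign tells which side of the edge a->b the point p lies on."""
    return (bx - ax) * (py - ay) - (by - ay) * (px - ax)


def rasterize_triangle(v0, v1, v2, width, height):
    """Return the set of (x, y) pixels whose centers fall inside the 2D triangle."""
    covered = set()
    for y in range(height):
        for x in range(width):
            px, py = x + 0.5, y + 0.5  # sample at the pixel center
            w0 = edge(*v1, *v2, px, py)
            w1 = edge(*v2, *v0, px, py)
            w2 = edge(*v0, *v1, px, py)
            # Inside when all three edge tests agree in sign (works for either winding).
            if (w0 >= 0 and w1 >= 0 and w2 >= 0) or (w0 <= 0 and w1 <= 0 and w2 <= 0):
                covered.add((x, y))
    return covered


if __name__ == "__main__":
    pixels = rasterize_triangle((0, 0), (8, 0), (0, 8), 8, 8)
    for y in range(8):
        print("".join("#" if (x, y) in pixels else "." for x in range(8)))
```

Each printed row shows which pixels the triangle covers. A full rasterizer would also interpolate depth across the triangle and keep only the nearest surface per pixel in a depth buffer, which is how it shows just what is visible from the single viewpoint.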

Ray Tracing

In short, ray tracing (RT) creates more realistic scenes by simulating lighting accurately. Instead of showing only what is visible from a single point of view, it also accounts for what is visible from many different points in many different directions. It works by shooting rays into the scene from the camera’s viewpoint. These rays interact with the scene’s objects, and computing the correct ray paths and the resulting lighting effects demands heavy computing power. On top of that, for a more realistic result, both the first hit of each ray on an object and its subsequent reflections onto other objects must be calculated. In real life, light bouncing around affects the entire scene; the same applies to graphics, where all of this lighting information must be considered for a highly realistic result. This is extremely demanding in real time, even with today’s hardware, which is why NVIDIA and AMD have introduced various techniques for real-time ray tracing that reduce the computing power required for realistic scenes.
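The following Python sketch shows the first step described above: one primary ray per pixel is shot from the camera and tested against a single sphere, and `reflect` computes the mirror direction a ray tracer would follow for the next bounce. The scene (one sphere, camera at the origin looking down -z) and all function names are illustrative assumptions; production ray tracers use acceleration structures such as BVHs and dedicated hardware to test huge numbers of rays against complex geometry.

```python
import math


# Small vector helpers on 3-tuples.
def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)


def normalize(a):
    return scale(a, 1.0 / math.sqrt(dot(a, a)))


def hit_sphere(origin, direction, center, radius):
    """Distance t to the nearest hit along a normalized ray, or None on a miss."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:  # ignore hits behind (or at) the ray origin
            return t
    return None


def reflect(d, n):
    """Mirror direction d about the unit surface normal n (the next bounce)."""
    return sub(d, scale(n, 2.0 * dot(d, n)))


def render(width, height, center=(0.0, 0.0, -3.0), radius=1.0):
    """Shoot one primary ray per pixel from a camera at the origin looking down -z."""
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            u = (x + 0.5) / width * 2.0 - 1.0   # screen x in [-1, 1]
            v = 1.0 - (y + 0.5) / height * 2.0  # screen y in [-1, 1], up is positive
            d = normalize((u, v, -1.0))
            row += "#" if hit_sphere((0.0, 0.0, 0.0), d, center, radius) else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    print("\n".join(render(21, 11)))
```

A full ray tracer would repeat the intersection test from each hit point along the `reflect` direction (and toward light sources), which is exactly where the extra computing cost comes from.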

Path Tracing

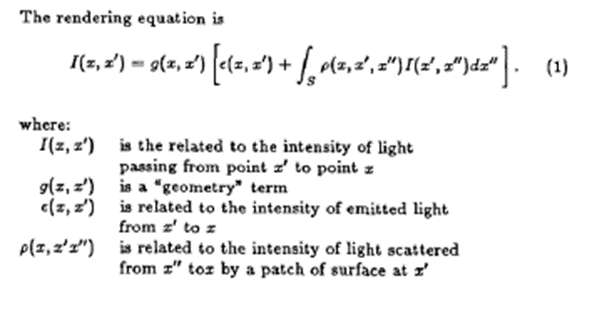

Path tracing is a method of simulating how light travels through a scene, and it is not new at all: it originated in the mid-1980s. In 1986, Caltech professor Jim Kajiya’s paper “The Rendering Equation” connected computer graphics with physics through ray tracing and introduced the path-tracing algorithm, making it possible to accurately represent how light scatters throughout a scene.
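For reference, the rendering equation is usually written today in the following hemispherical form (Kajiya’s paper states it in an equivalent point-to-point form). The outgoing radiance $L_o$ leaving point $x$ in direction $\omega_o$ is the emitted radiance $L_e$ plus all incoming radiance $L_i$ reflected toward $\omega_o$, weighted by the surface’s BRDF $f_r$ and by the cosine between the incoming direction $\omega_i$ and the surface normal $n$:

$$
L_o(x, \omega_o) = L_e(x, \omega_o) + \int_{\Omega} f_r(x, \omega_i, \omega_o)\, L_i(x, \omega_i)\, (\omega_i \cdot n)\, d\omega_i
$$

Path tracing estimates the integral over the hemisphere $\Omega$ by randomly sampling incoming directions. Because $L_i$ is itself the outgoing radiance of another surface, each sample extends a path further through the scene.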

In this method, many rays are shot into a scene, and the algorithm tracks them as they interact with the scene’s objects. The critical factor that keeps the computing cost manageable is that, instead of following every possible light path for each pixel, it traces only a random sample of them and uses that sample to approximate the real result as closely as possible. To put it differently, path tracing favors the reflections that contribute most to an accurate picture of a real-life scene and spends less effort on those that matter less. Naturally, the more ray samples the algorithm takes into account, the better the visual result, but this comes at a higher computing cost.
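The sampling idea can be sketched in Python with a classic Monte Carlo estimate. Assuming a surface lit by constant unit radiance from every direction, the integral of cos(θ) over the hemisphere equals exactly π. Uniformly sampled directions converge on π with an error that shrinks roughly as 1/√N, while cosine-weighted sampling, a simple form of importance sampling that favors the directions contributing most, reaches π with no noise in this special case. The scene and function names are hypothetical; a real path tracer evaluates full light paths with materials and visibility.

```python
import math
import random


def uniform_hemisphere(rng):
    """Direction uniformly distributed over the hemisphere around +z; pdf = 1 / (2*pi)."""
    z = rng.random()  # cos(theta) is uniform in [0, 1) for this distribution
    r = math.sqrt(max(0.0, 1.0 - z * z))
    phi = 2.0 * math.pi * rng.random()
    return (r * math.cos(phi), r * math.sin(phi), z), 1.0 / (2.0 * math.pi)


def cosine_hemisphere(rng):
    """Cosine-weighted direction around +z (importance sampling); pdf = cos(theta) / pi."""
    u1, u2 = rng.random(), rng.random()
    r = math.sqrt(u1)
    phi = 2.0 * math.pi * u2
    z = math.sqrt(1.0 - u1)  # strictly positive because u1 < 1
    return (r * math.cos(phi), r * math.sin(phi), z), z / math.pi


def estimate_irradiance(n_samples, sampler, seed=0):
    """Monte Carlo estimate of the hemisphere integral of L * cos(theta) with L = 1.
    The exact answer is pi."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_samples):
        direction, pdf = sampler(rng)
        cos_theta = direction[2]  # the surface normal is +z
        total += cos_theta / pdf  # integrand divided by the sampling pdf
    return total / n_samples


if __name__ == "__main__":
    for n in (16, 256, 4096, 65536):
        err = abs(estimate_irradiance(n, uniform_hemisphere) - math.pi)
        print(f"{n:6d} uniform samples -> error {err:.4f}")
    print("cosine-weighted, 16 samples:", estimate_irradiance(16, cosine_hemisphere))
```

The uniform-sampling errors should generally fall as N grows, which is the trade-off described above: more samples, less noise, more computation. Better sampling strategies reduce the noise for the same number of samples.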

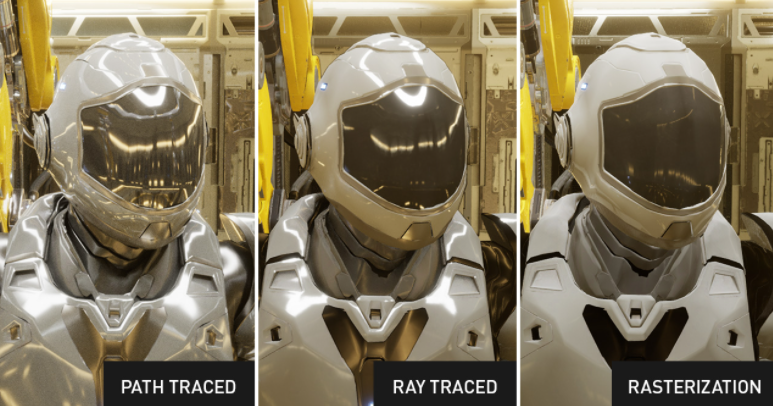

Because a limited number of samples leaves visible noise, path-traced images are combined with denoising algorithms to produce a cleaner result. The results are astonishing, as you can see in the screenshot below. To read more about path tracing, see this article written by an NVIDIA employee.
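As a rough illustration of the denoising step, the Python sketch below applies a simple box filter, averaging each pixel with its neighbors, to a grayscale image stored as a list of rows. This is only a stand-in under stated assumptions: production denoisers, including AI-based ones like Ray Reconstruction, are typically edge-aware and use extra inputs such as normals and motion vectors, whereas a box filter also blurs real detail. `box_denoise` and `variance` are hypothetical helper names.

```python
import random


def box_denoise(image, radius=1):
    """Replace each pixel with the mean of its in-bounds neighbors within `radius`."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        total += image[ny][nx]
                        count += 1
            out[y][x] = total / count
    return out


def variance(image):
    """Pixel-value variance, used here as a crude measure of noise."""
    values = [v for row in image for v in row]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical noisy render: flat 0.5 gray plus random per-pixel noise.
    noisy = [[0.5 + rng.uniform(-0.2, 0.2) for _ in range(32)] for _ in range(32)]
    print("noise variance before:", variance(noisy))
    print("noise variance after: ", variance(box_denoise(noisy)))
```

Averaging nine roughly independent noisy samples cuts the noise variance substantially, but it cannot tell noise from genuine edges, which is why smarter denoisers are needed for convincing results.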

Combining Rasterization, Ray Tracing & Path Tracing!

Rasterization is effectively equivalent to casting one set of rays from a single point (the camera), each of which stops at the first object it hits. Ray tracing casts rays from many points in any direction, and finally, path tracing tries to truly simulate the physics of light, with ray tracing as one of its components.

DLSS 3.5

NVIDIA originally built DLSS Super Resolution around a convolutional autoencoder AI model that takes the low-resolution current frame and the high-resolution previous frame and reconstructs a high-resolution current frame on a pixel-by-pixel basis. To train it, the model’s prediction is compared against an ultra-high-resolution reference image, and the difference between the predicted and reference images is used to adjust the neural network. This process is repeated tens of thousands of times until the network can reliably predict a high-quality image.
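The predict-compare-adjust loop described above can be illustrated with a deliberately tiny Python example. It is not DLSS: instead of a convolutional autoencoder, the hypothetical "upscaler" has a single learnable weight `w` that fills each missing pixel of a half-resolution 1D image as `w * (left + right)`. Gradient descent on the mean squared difference between the prediction and a full-resolution reference (here an assumed linear ramp) repeatedly nudges `w` toward the value that best reproduces the reference.

```python
def upscale(low, w):
    """Double the resolution of a 1D image: keep the known pixels and predict each
    missing in-between pixel as w * (left + right). `w` is the only learnable weight."""
    out = []
    for i in range(len(low) - 1):
        out.append(low[i])
        out.append(w * (low[i] + low[i + 1]))
    out.append(low[-1])
    return out


def train(reference, steps=100, lr=0.1, w=0.0):
    """Fit w by gradient descent on the mean squared error against the reference.
    `reference` must have odd length so the half-resolution copy keeps both ends."""
    low = reference[::2]  # simulate rendering at half resolution
    for _ in range(steps):
        pred = upscale(low, w)
        grad, n = 0.0, 0
        # Only the predicted (odd-index) pixels depend on w.
        for i in range(len(low) - 1):
            s = low[i] + low[i + 1]
            err = pred[2 * i + 1] - reference[2 * i + 1]
            grad += 2.0 * err * s  # derivative of err**2 with respect to w
            n += 1
        w -= lr * grad / n
    return w


if __name__ == "__main__":
    reference = [k / 16 for k in range(17)]  # hypothetical high-res "ground truth"
    print("learned weight:", train(reference))
```

For a linear ramp the best weight is exactly 0.5 (plain linear interpolation), and the loop converges to it. Richer reference images and far larger models are what let a real network learn more than simple interpolation.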

NVIDIA DLSS 3.5 is a suite of AI rendering technologies, powered by the Tensor Cores on GeForce RTX GPUs, that delivers faster frame rates, better image quality, and increased responsiveness. DLSS includes Super Resolution & DLAA (available on all RTX GPUs), Frame Generation (RTX 40 Series GPUs), and Ray Reconstruction (available on all RTX GPUs). Ray Reconstruction enhances image quality in intensive ray-traced scenes by using AI to generate higher-quality pixels in between the sampled rays.

The primary difference between DLSS 3 and 3.5 is that while DLSS 3’s headline feature, Frame Generation, is limited to RTX 40 Series GPUs, DLSS 3.5’s new feature, Ray Reconstruction, improves ray tracing on all RTX GPUs. DLSS 3 Frame Generation uses existing frames and deep learning to estimate what an intermediate frame should look like, so it can insert a generated frame between two natively rendered ones. In DLSS 3.5, ray-traced image quality improves thanks to an AI network trained on 5x more data than DLSS 3; the network is trained on NVIDIA’s supercomputers to learn how a scene should look, and the GPU then runs it to provide better results. As a result, ray-traced elements, including reflections, are shown better in DLSS 3.5.
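To see why frame generation needs motion information rather than simply averaging two frames, consider this simplified 1D Python sketch. It is not NVIDIA’s algorithm, which combines optical flow, game motion vectors, and an AI network. Here, hypothetical per-pixel motion vectors (in pixels per frame) are assumed to be known, moving pixels are assumed to lie in front of static ones, and each pixel is moved halfway along its motion vector to build the in-between frame.

```python
def blend_midframe(frame_a, frame_b):
    """Naive in-between frame: average the two frames (moving objects ghost)."""
    return [(a + b) / 2 for a, b in zip(frame_a, frame_b)]


def warp_midframe(frame_a, frame_b, motion):
    """Motion-compensated in-between frame: move each pixel of frame_a halfway
    along its motion vector. Assumes moving pixels are in front of static ones."""
    width = len(frame_a)
    mid = [None] * width
    # Splat static pixels first so moving (foreground) pixels overwrite them.
    for x in sorted(range(width), key=lambda i: motion[i] != 0):
        target = x + round(motion[x] / 2)
        if 0 <= target < width:
            mid[target] = frame_a[x]
    # Pixels nobody landed on (revealed background) are filled from the later frame.
    return [frame_b[x] if v is None else v for x, v in enumerate(mid)]


if __name__ == "__main__":
    a = [0, 0, 1, 0, 0, 0, 0, 0]  # bright pixel at x=2
    b = [0, 0, 0, 0, 0, 0, 1, 0]  # ...has moved to x=6
    m = [0, 0, 4, 0, 0, 0, 0, 0]  # hypothetical motion vectors for frame a
    print("blend:", blend_midframe(a, b))
    print("warp: ", warp_midframe(a, b, m))
```

Averaging produces two half-bright ghosts at x=2 and x=6, while the motion-compensated version places the pixel at x=4, where it should be halfway between the two frames.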

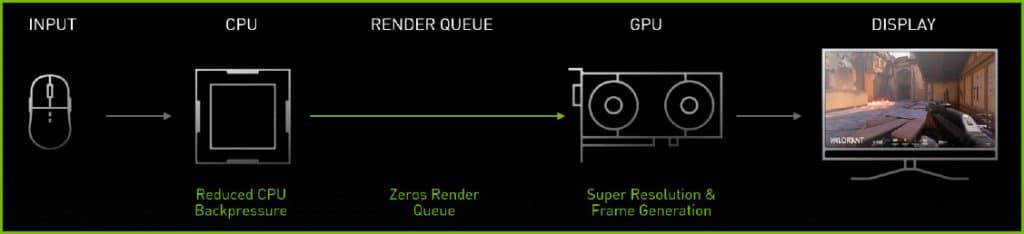

Reflex

The Reflex SDK allows game developers to reduce game latency by up to 2x. It works by aligning game engine work so that it completes just in time for rendering, which eliminates the GPU render queue and reduces CPU back pressure in GPU-bound scenarios. The result is lower latency, improved responsiveness, and better aiming precision. NVIDIA Reflex is automatically enabled when DLSS 3 Frame Generation is turned on.

Reflex dynamically reduces latency by combining both GPU and game optimizations:

• In GPU-bound cases, the Reflex SDK enables the CPU to submit rendering work to the GPU just before the GPU finishes the prior frame, significantly reducing and often eliminating the render queue. This allows for better response times and increased aiming precision. Reflex also reduces back pressure on the CPU, enabling games to sample mouse input at the last possible moment. Although it is similar to the driver’s Ultra Low Latency mode, NVIDIA says Reflex is superior because it controls CPU render submission directly from within the game engine.

• The Reflex SDK also boosts GPU clocks and allows frames to be submitted to the display slightly sooner in some heavily CPU-bound cases.
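The effect of the render queue on latency can be sketched with a toy Python model. It assumes a GPU-bound game in which the CPU samples input when it starts a frame, the finished frame then waits behind `queued_frames` earlier frames, and the GPU finally renders it; display scanout and other pipeline stages are ignored. The function name and the millisecond values are hypothetical, chosen only to illustrate the trend, and are not measured Reflex results.

```python
def gpu_bound_latency_ms(cpu_ms, gpu_ms, queued_frames):
    """Toy input-to-render latency for a GPU-bound game (cpu_ms <= gpu_ms).

    Input is sampled when the CPU starts a frame. The frame is then prepared
    (cpu_ms), waits behind `queued_frames` frames that each take gpu_ms on the
    GPU, and is finally rendered itself (gpu_ms).
    """
    if cpu_ms > gpu_ms:
        raise ValueError("this model only covers GPU-bound cases (cpu_ms <= gpu_ms)")
    if queued_frames < 0:
        raise ValueError("queued_frames must be non-negative")
    return cpu_ms + queued_frames * gpu_ms + gpu_ms


if __name__ == "__main__":
    cpu_ms, gpu_ms = 5.0, 10.0  # hypothetical frame times
    for queued in (2, 1, 0):    # 0 = just-in-time submission, which Reflex aims for
        latency = gpu_bound_latency_ms(cpu_ms, gpu_ms, queued)
        print(f"queued frames: {queued}  latency: {latency} ms")
```

In this toy model each queued frame adds a full GPU frame time of waiting, so submitting work just in time removes that wait entirely, and because input is sampled later, the rendered frame reflects more recent mouse movement.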