In the high-stakes race for AI supremacy, Nvidia is urging its memory suppliers to accelerate the development of next-generation HBM4 memory. According to industry analysts, the chip giant is seeking speeds beyond the official standard to maintain its performance lead as rival AMD prepares to launch its MI450 platform in 2026.

The move highlights the critical role of memory bandwidth in powering advanced artificial intelligence models and the intense competition between the two tech giants.

The Need for Speed: 10 Gbps or Bust

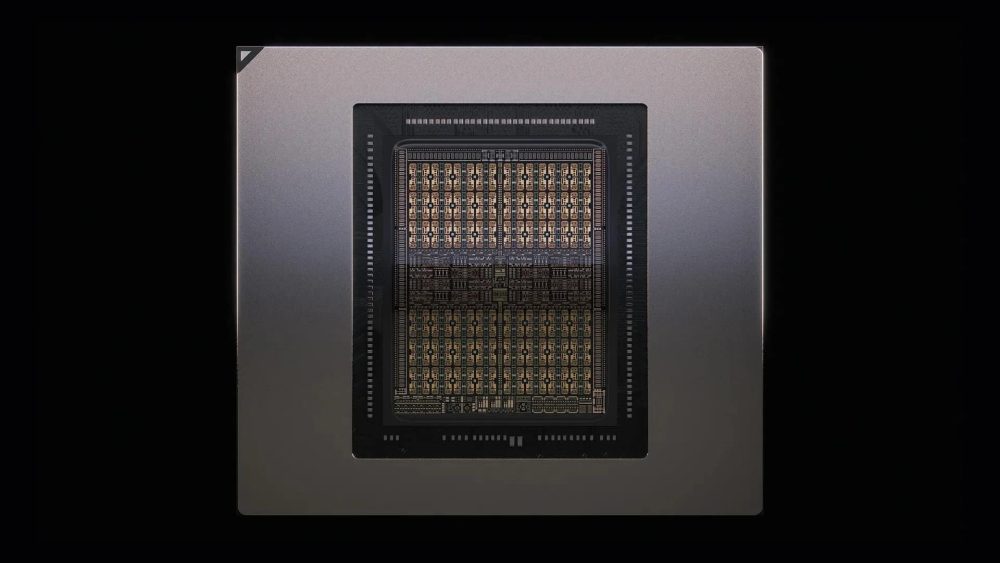

The official standard for HBM4, set by the JEDEC committee, is 8 gigabits per second (Gbps) per data pin. However, TrendForce reports that Nvidia has requested its partners achieve 10 Gbps for its upcoming “Vera Rubin” AI GPU platform.

This speed boost is significant. HBM4 uses a 2,048-bit interface per stack, so at 10 Gbps per pin a single stack would deliver 2.56 terabytes per second (TB/s) of bandwidth, compared with roughly 2 TB/s at the standard 8 Gbps. With multiple stacks on a single GPU, the total scales accordingly, which is essential for feeding the massive datasets used in AI training and inference.
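The per-stack figure can be checked with a few lines of arithmetic. The sketch below is only illustrative: it assumes the 2,048-bit per-stack interface width defined for HBM4 and decimal units (1 TB = 1,000 GB). The function names and the stack count in the usage example are hypothetical, since the stack count of any particular GPU is not given here.

```python
def stack_bandwidth_tbps(pin_rate_gbps: float, interface_bits: int = 2048) -> float:
    """Peak bandwidth of one HBM stack in TB/s (decimal units).

    pin_rate_gbps:  data rate per pin in gigabits per second
    interface_bits: assumed interface width per stack (2,048 for HBM4)
    """
    total_gbits_per_s = pin_rate_gbps * interface_bits  # Gbit/s across all pins
    total_gbytes_per_s = total_gbits_per_s / 8          # bits -> bytes
    return total_gbytes_per_s / 1000                    # GB/s -> TB/s


def gpu_bandwidth_tbps(pin_rate_gbps: float, stacks: int) -> float:
    """Aggregate peak bandwidth for a GPU carrying `stacks` HBM stacks."""
    return stacks * stack_bandwidth_tbps(pin_rate_gbps)


if __name__ == "__main__":
    jedec = stack_bandwidth_tbps(8)    # standard 8 Gbps pin rate
    target = stack_bandwidth_tbps(10)  # reported 10 Gbps request
    print(f"8 Gbps:  {jedec:.3f} TB/s per stack")
    print(f"10 Gbps: {target:.3f} TB/s per stack")
    print(f"Uplift:  {(target / jedec - 1) * 100:.0f}%")

    # Hypothetical stack count, for illustration only.
    example_stacks = 8
    print(f"{example_stacks} stacks at 10 Gbps: "
          f"{gpu_bandwidth_tbps(10, example_stacks):.2f} TB/s")
```

The uplift is simply the ratio of the pin rates, so moving from 8 Gbps to 10 Gbps raises peak bandwidth by 25% regardless of how many stacks a GPU carries.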

The AMD Factor

Nvidia’s push is widely seen as a direct response to AMD’s upcoming MI450 AI accelerator and the “Helios” rack-scale platform built around it, both expected in 2026. AMD’s new architecture is designed to be highly competitive, and by securing faster memory, Nvidia aims to blunt any performance advantage before AMD’s hardware reaches the market. The preemptive move underscores the strategic importance of being first to market with superior specifications.

The Supply Chain Challenge

While demanding higher performance, Nvidia’s primary concern remains a stable supply. The production of HBM4 is a complex process led by three major players: SK hynix, Samsung, and Micron.

- SK hynix, currently Nvidia’s largest HBM supplier, is expected to retain its leading position into 2026 due to its established partnerships and production capacity.

- Samsung is taking a more aggressive approach by using an advanced 4nm manufacturing process for the HBM4 “base die,” which could give it a technical edge in achieving the coveted 10 Gbps speed.

- Micron is also developing HBM4 but has been less specific about its high-speed capabilities.

Achieving 10 Gbps is not guaranteed. Pushing for higher speeds can lead to increased power consumption, heat, and manufacturing complexity. If the 10 Gbps target proves too challenging or expensive, analysts suggest Nvidia may create a two-tier product lineup, using the fastest memory for its top-tier “Rubin CPX” data center racks and slower, more readily available HBM4 for standard configurations.

The Bottom Line

The battle for AI hardware dominance is increasingly fought in the memory stack. Nvidia’s attempt to secure a speed advantage for its 2026 platforms demonstrates that it is not taking the AMD threat lightly. However, this strategy comes with risks, potentially making Nvidia more dependent on the success of a specific supplier and a specific, unproven memory technology.

The outcome of this behind-the-scenes effort will significantly influence the performance and availability of the systems that power the next generation of AI applications. For now, the industry watches to see if memory makers can deliver on Nvidia’s ambitious request.