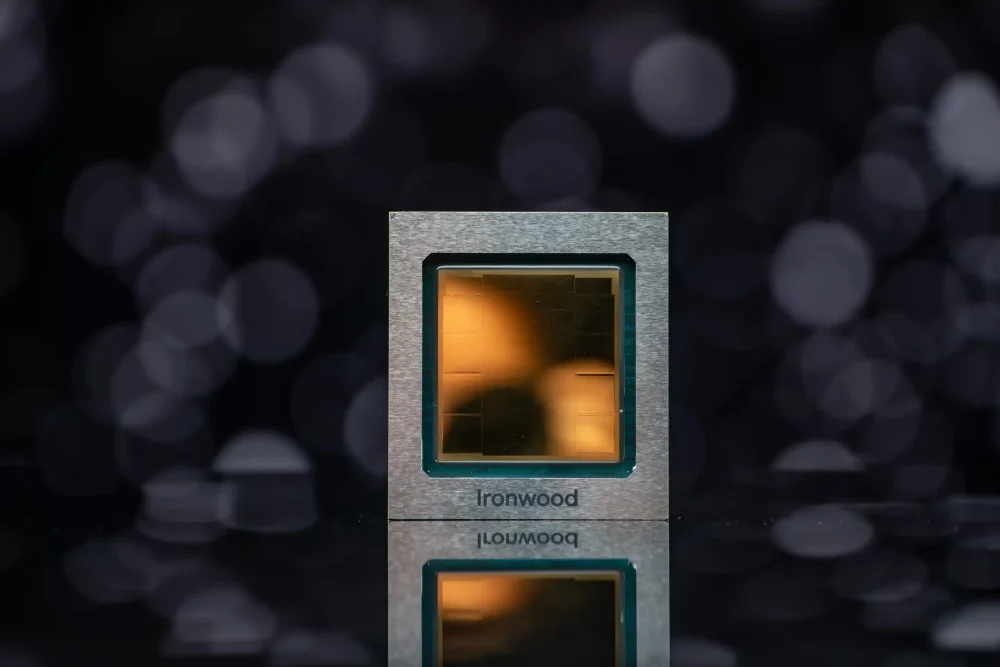

Move over, El Capitan — there’s a new sheriff in Silicon Town, and it answers to Ironwood.

Unveiled at Google Cloud Next ’25, Google’s seventh-generation TPU isn’t just fast; it’s built to scale. Across a full pod of 9,216 chips, Ironwood delivers a jaw-dropping 42.5 exaflops of peak AI compute. By Google’s count, that’s more than 24x the compute of El Capitan, the world’s current fastest supercomputer. Yes, you read that right. Twenty. Four. Times.

But the real game-changer? This beast wasn’t built primarily to train those already-massive AI models. It’s purpose-built for inference: the day-to-day workhorse that answers our questions, writes our emails, and generates our AI-powered selfies. We’re entering what Google calls the “Age of Inference”, where AI doesn’t just wait for commands. It thinks ahead, collaborates with other agents, and proactively generates insights. Think less “calculator,” more “digital coworker.”

⚙️ Under the Hood: Ironwood’s Unholy Specs

- 4,614 teraflops of peak compute per chip
- 192 GB of HBM per chip (6x Trillium’s memory)
- 7.2 TBps of memory bandwidth per chip
- 2x performance-per-watt over Trillium
- Nearly 30x the power efficiency of Google’s first Cloud TPU

You can do all this without burning down your data center. In an era where energy is the bottleneck, that kind of efficiency is a power play—literally.
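Want to sanity-check the headline numbers? Here is a quick back-of-the-envelope sketch in Python that multiplies the per-chip figures above out to a full 9,216-chip pod. One loud assumption: the El Capitan figure of roughly 1.742 exaflops is not from this article; it is the publicly reported benchmark result as best I recall it, so treat the ratio as indicative rather than exact. The function and constant names are just illustrative.

```python
# Per-chip figures quoted in the spec list above.
CHIPS_PER_POD = 9_216
PEAK_TFLOPS_PER_CHIP = 4_614
HBM_GB_PER_CHIP = 192

# ASSUMPTION: El Capitan's reported result of about 1.742 exaflops.
# This number is not in the article and is used only for the ratio.
EL_CAPITAN_EXAFLOPS = 1.742


def pod_exaflops(chips: int, tflops_per_chip: float) -> float:
    """Aggregate peak compute of a pod, in exaflops (1 exaflop = 1e6 teraflops)."""
    return chips * tflops_per_chip / 1_000_000


def pod_hbm_petabytes(chips: int, gb_per_chip: float) -> float:
    """Total HBM across a pod, in petabytes (1 PB = 1e6 GB)."""
    return chips * gb_per_chip / 1_000_000


pod_peak = pod_exaflops(CHIPS_PER_POD, PEAK_TFLOPS_PER_CHIP)
pod_memory = pod_hbm_petabytes(CHIPS_PER_POD, HBM_GB_PER_CHIP)
ratio = pod_peak / EL_CAPITAN_EXAFLOPS

print(f"Pod peak compute: {pod_peak:.1f} exaflops")      # 42.5
print(f"Pod HBM capacity: {pod_memory:.2f} PB")          # 1.77
print(f"Ratio vs. assumed El Capitan figure: {ratio:.1f}x")  # 24.4
```

The per-chip numbers do multiply out to the 42.5 exaflops headline, and the ratio lands just above 24x under the assumed El Capitan figure. Keep in mind these are peak numbers, not measured throughput on any particular workload.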

🧩 Why It Matters: The Shift from Training to Reasoning

Inference isn’t sexy… until you realize it runs billions of times a day across your favorite apps. Google knows the economics of AI are shifting. The models are already trained; now it’s about deploying them faster, cheaper, and smarter.

Enter Gemini 2.5. Ironwood is designed to serve this new generation of models, which isn’t just responding. It’s reasoning, planning, and even simulating complex problem-solving. And with variants like Gemini 2.5 Flash, Google is optimizing responsiveness for everything from financial forecasts to real-time customer support.

This is where the actual intelligence arms race begins — not in building the biggest brain, but in teaching it how to think faster and burn fewer calories.

🌐 The Bigger Picture: A New Kind of AI Stack

Ironwood is just one chess piece in Google’s strategy. Add:

- Cloud WAN for enterprise networking dominance
- Pathways for ML workload scaling
- Agent Development Kit (ADK) for creating systems of collaborating AI agents
- Agent2Agent (A2A) protocol for standardizing multi-agent communication

Suddenly, Google isn’t just selling chips — it’s selling the nervous system for tomorrow’s AI ecosystem.

And that’s not all. With over 400 enterprise AI success stories lined up, Google is making it crystal clear: this isn’t sci-fi — it’s market-ready infrastructure, and it’s coming for the enterprise wallet.

🥊 The Coming War: Google vs Microsoft vs Amazon

Let’s not kid ourselves — the cloud chip war just escalated into a silicon arms race.

- Microsoft has OpenAI and Azure-powered everything.
- Amazon is pushing Trainium and Inferentia like it’s Prime Day every day.
- Google just dropped Ironwood, with full-stack control from silicon to software.

What’s wild? Google is balancing proprietary TPU advantages with open standards for agent interoperability. It’s like building the roads and owning the racecars — and letting others drive, as long as they pay the toll.

This could reshape the entire AI industry, especially if the A2A approach to agent interoperability catches on. And if Microsoft and AWS don’t counter quickly, they might find themselves stuck in the training lane while Google zooms ahead into an inference-driven future.

🔮 This Isn’t Just a Chip. It’s a Chapter in AI History.

Google’s Ironwood isn’t just a flex of power. It’s a flag planted in the ground, declaring that the AI battlefield is shifting—from brute-force training to real-time reasoning, from data centers to multi-agent collaboration, from walled gardens to ecosystem dominance.

Inference is the new frontier.

And Ironwood? It’s the engine that’s going to drive it.