Flash-accelerated AI memory brings industry-first large-model training and faster inference to integrated GPUs

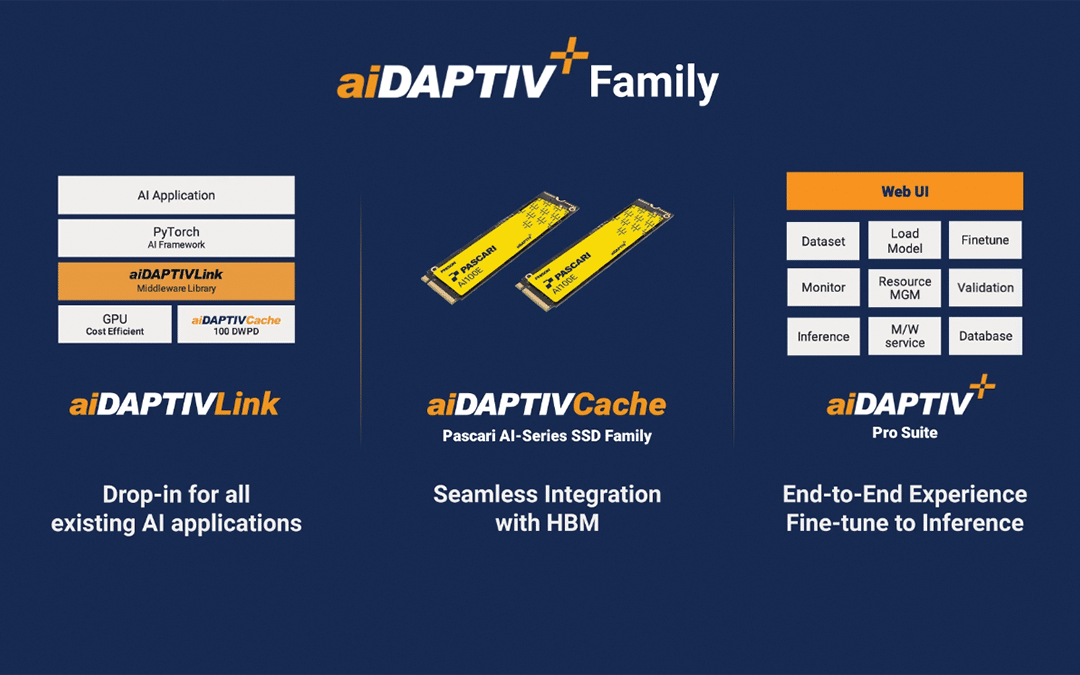

Las Vegas, NV — CES 2026 — January 6, 2026 — Phison Electronics (8299TT), a global leader in NAND flash controllers and storage solutions, today announced expanded capabilities for its aiDAPTIV+ technology, extending powerful AI processing to integrated GPU architectures. Built on Phison’s 25 years of flash memory expertise, the expanded aiDAPTIV+ architecture now accelerates inference, significantly increases memory capacity, and simplifies deployment, unlocking large-model AI capabilities on notebook PCs, desktop PCs, and mini-PCs.

As organizations confront unprecedented data volumes and increasingly complex AI training and inference workloads, demand is rising for solutions that are both accessible and affordable on everyday devices. aiDAPTIV+ addresses these challenges, along with the ongoing market-wide memory shortage, by using NAND flash as memory to remove compute bottlenecks, enabling on-premises inferencing and fine-tuning of large models on ubiquitous platforms.

Today’s announcement highlights the expanding collaboration between Phison and its strategic partners, including enabling larger LLM training on Acer laptops with significantly less DRAM. This lets users run AI workloads on smaller platforms that meet requirements for data privacy, scalability, affordability, and ease of use. For OEMs, resellers, and system integrators, the technology also supports end-to-end solutions that overcome traditional GPU VRAM limitations.

“As AI models grow into tens and hundreds of billions of parameters, the industry keeps hitting the same wall with GPU memory limitations,” said Michael Wu, President and GM, Phison US. “By expanding GPU memory with high-capacity, flash-based architecture in aiDAPTIV+, we offer everyone, from consumers and SMBs to large enterprises, the ability to train and run large-scale models on affordable hardware. In effect, we are turning everyday devices into supercomputers.”

“Our engineering collaboration enables Phison’s aiDAPTIV+ technology to accommodate and accelerate large models such as gpt-oss-120b on an Acer laptop with just 32GB of memory,” said Mark Yang, AVP, Compute Software Technology at Acer. “This can significantly enhance the user experience interacting with on-device Agentic AI, for actions ranging from simple search to intelligent inquiries that support productivity and creativity.”

aiDAPTIV+ technology and partner solutions will be showcased at the Phison Bellagio Suite and partner booths during CES from January 6-8, 2026, including support for:

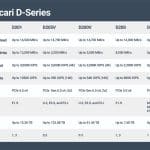

Reduced TCO and Memory Consumption

For Mixture of Experts (MoE) inference, aiDAPTIV+ offloads memory demands from DRAM to a cost-effective flash-based cache. In Phison testing, a 120B-parameter model can now be handled with 32GB of DRAM, compared with the 96GB required by traditional approaches.¹ This extends MoE processing to a broader range of platforms.
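To illustrate the general idea of serving MoE weights from a small DRAM budget backed by flash, the minimal Python sketch below keeps only a bounded number of recently used experts resident and loads the rest on demand from a slower tier. It is a generic least-recently-used (LRU) cache, not Phison's implementation: `ExpertCache`, `load_from_flash`, `fake_flash`, and the capacity of two experts are all hypothetical, and the sketch does not reproduce the 32GB and 96GB figures from Phison's testing.

```python
from collections import OrderedDict
from typing import Callable


class ExpertCache:
    """Hypothetical LRU cache holding a bounded number of MoE experts in fast memory."""

    def __init__(self, capacity: int, load_from_flash: Callable[[int], bytes]):
        self.capacity = capacity
        self.load_from_flash = load_from_flash  # assumed loader for the slower flash tier
        self.resident: "OrderedDict[int, bytes]" = OrderedDict()
        self.flash_reads = 0

    def get(self, expert_id: int) -> bytes:
        if expert_id in self.resident:
            self.resident.move_to_end(expert_id)  # hit: mark as most recently used
            return self.resident[expert_id]
        weights = self.load_from_flash(expert_id)  # miss: read weights from flash tier
        self.flash_reads += 1
        self.resident[expert_id] = weights
        if len(self.resident) > self.capacity:
            self.resident.popitem(last=False)  # evict the least recently used expert
        return weights


def fake_flash(expert_id: int) -> bytes:
    # Stand-in for reading an expert's weights from flash storage.
    return f"expert-{expert_id}".encode()


cache = ExpertCache(capacity=2, load_from_flash=fake_flash)
# The router activates only a few experts per token, so only those must be resident.
for routed in [[0, 1], [0, 1], [1, 2], [0]]:
    for e in routed:
        cache.get(e)
print(cache.flash_reads, list(cache.resident))
```

With room for two experts, the four routing steps trigger four flash reads and leave experts 2 and 0 resident. The design point is that total model size is bounded by flash capacity, while DRAM only has to hold the working set of active experts.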

Faster Inference Performance

During inference, aiDAPTIV+ stores the cached key/value data for tokens that no longer fit in the KV cache, so it can be reused for future prompts rather than recalculated. Based on Phison testing, this accelerates inference response times by 10x and reduces power consumption. Early aiDAPTIV+ testing on notebook PCs shows substantial responsiveness gains, including a noticeable improvement in Time to First Token (TTFT), demonstrating that significant inference acceleration is achievable on notebook platforms.
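The general technique of reusing KV cache data instead of recomputing it can be sketched as a two-tier prefix cache. Entries evicted from a bounded fast tier are spilled to a slower tier rather than discarded, so a later prompt that shares a prefix only has to compute its new suffix. This is an illustrative Python sketch, not aiDAPTIV+ code: `TieredKVCache`, the whole-prompt cache keys, the one-entry fast tier, and the string stand-ins for key/value tensors are hypothetical. Production systems typically cache at fixed-size token-block granularity instead.

```python
from collections import OrderedDict
from typing import Dict, List, Optional, Tuple

KV = List[str]  # stand-in for per-token key/value tensors
Prefix = Tuple[int, ...]


class TieredKVCache:
    """Hypothetical prefix KV cache: bounded fast tier plus a spill tier for evictions."""

    def __init__(self, fast_capacity: int):
        self.fast_capacity = fast_capacity
        self.fast: "OrderedDict[Prefix, KV]" = OrderedDict()
        self.spill: Dict[Prefix, KV] = {}  # stands in for flash-backed storage

    def _put(self, prefix: Prefix, kv: KV) -> None:
        self.fast[prefix] = kv
        self.fast.move_to_end(prefix)
        if len(self.fast) > self.fast_capacity:
            old_prefix, old_kv = self.fast.popitem(last=False)
            self.spill[old_prefix] = old_kv  # spill to the slower tier instead of discarding

    def _lookup(self, prefix: Prefix) -> Optional[KV]:
        if prefix in self.fast:
            self.fast.move_to_end(prefix)
            return self.fast[prefix]
        if prefix in self.spill:
            kv = self.spill.pop(prefix)
            self._put(prefix, kv)  # promote back to the fast tier
            return kv
        return None

    def prefill(self, tokens: List[int]) -> Tuple[KV, int]:
        """Return KV data for all tokens and how many tokens had to be computed."""
        cut, kv = 0, []
        # Find the longest cached prefix of this prompt.
        for length in range(len(tokens), 0, -1):
            found = self._lookup(tuple(tokens[:length]))
            if found is not None:
                cut, kv = length, found
                break
        # "Compute" KV data only for the uncached suffix.
        new_kv = kv + [f"kv({t})" for t in tokens[cut:]]
        self._put(tuple(tokens), new_kv)
        return new_kv, len(tokens) - cut


cache = TieredKVCache(fast_capacity=1)
system = [1, 2, 3, 4]
kv_a, computed_a = cache.prefill(system)          # cold start: all 4 tokens computed
kv_b, computed_b = cache.prefill([9, 9])          # pushes the system prompt to the spill tier
kv_c, computed_c = cache.prefill(system + [5, 6]) # reuses the spilled 4-token prefix
print(computed_a, computed_b, computed_c)
```

The third prompt computes only its 2 new tokens. Without the spill tier, the evicted system prompt would be gone and all 6 tokens would be recomputed. Avoiding that recomputation is the kind of saving that shortens Time to First Token.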

Bigger Data on Smaller Devices

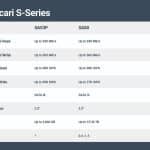

Combining Phison’s aiDAPTIV+ with new Intel Core Ultra Series 3 processors and their built-in Intel Arc GPUs enables larger LLMs to be trained directly on notebook PCs, addressing industry demand for high-performance AI workflows on iGPUs. Phison’s lab testing shows that a notebook equipped with this technology can fine-tune a 70B-parameter model. A model of that size previously required engineering workstations or data center servers costing up to 10 times as much. Now, students, developers, researchers, and organizations can access far greater AI capabilities on familiar notebook platforms at a lower cost.

At CES 2026, Phison is showcasing notebooks, desktops, mini-PCs, and personal AI supercomputers from partners including Acer, Corsair, MSI, and NVIDIA, running integrated processors with aiDAPTIV+. MSI will show both an AI notebook and a desktop PC in its booth, using aiDAPTIV+ to accelerate inference in an online application that summarizes meeting notes. Additional partners ASUS and Emdoor will demonstrate notebook and desktop computers leveraging aiDAPTIV+ in their booths.