AMD’s latest AI chips go toe-to-toe with Blackwell, with performance parity, memory supremacy, and a 30% discount that Wall Street can’t ignore.

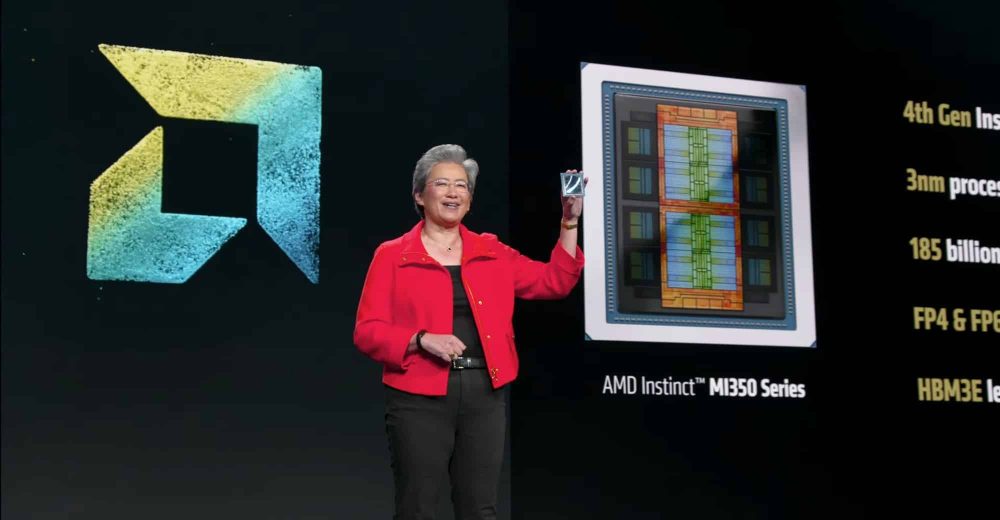

It’s been a long time coming, but it finally happened: Advanced Micro Devices has fired a shot that NVIDIA might actually need to duck. In a bold new report, HSBC analysts declared that AMD’s new Instinct MI350 series, especially the MI355X model, matches NVIDIA’s mighty Blackwell B200 GPUs in performance and, in some ways, beats them.

For years, NVIDIA has been the uncontested heavyweight in AI silicon. CUDA, Tensor Cores, rack-scale dominance—you name it, Jensen Huang had it locked down. But AMD, under Lisa Su’s relentlessly focused leadership, has now engineered a real contender, with memory bandwidth so high it borders on the obscene and pricing so aggressive it could actually rewrite the hyperscaler procurement playbook.

What HSBC Saw That Made Them Shout “Buy!”

In a note that doubled AMD’s price target to $200 and upgraded the stock to a “Buy,” HSBC pointed to two key advantages:

- Memory Bandwidth That Breaks Bottlenecks: The MI355X delivers a mind-bending 22.1 terabytes per second (TB/s) of memory bandwidth, nearly 3x the Blackwell B200’s 8 TB/s. When you’re training giant foundation models with tens or hundreds of billions of parameters, bandwidth is where performance lives or dies.

- Pricing With Teeth: AMD’s MI350 series carries an average selling price of $25,000, roughly 30% below comparable NVIDIA hardware. NVIDIA may still hold the edge in raw compute, at about 5,000 sparse TFLOPS versus AMD’s 3,000–4,000 dense TFLOPS (a lopsided comparison, since sparse figures typically run about twice the dense ones), but the value proposition is undeniably compelling.
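To put HSBC’s two headline numbers in perspective, the short calculation below reproduces the bandwidth ratio and backs out the NVIDIA price implied by a ~30% discount. It is a minimal sketch that takes the quoted figures at face value and assumes the discount is measured against NVIDIA’s price; the constant and function names are illustrative only.

```python
# Figures as quoted in the HSBC note above (taken at face value, not verified).
MI355X_BANDWIDTH_TBS = 22.1
B200_BANDWIDTH_TBS = 8.0
MI350_ASP_USD = 25_000
DISCOUNT = 0.30  # "~30% discount" versus comparable NVIDIA hardware


def bandwidth_ratio(amd_tbs: float, nvidia_tbs: float) -> float:
    """How many times more memory bandwidth the AMD part offers."""
    return amd_tbs / nvidia_tbs


def implied_nvidia_price(amd_asp: float, discount: float) -> float:
    """NVIDIA price implied if AMD sells at `discount` below it.

    Assumption: amd_asp = nvidia_price * (1 - discount).
    """
    return amd_asp / (1.0 - discount)


if __name__ == "__main__":
    ratio = bandwidth_ratio(MI355X_BANDWIDTH_TBS, B200_BANDWIDTH_TBS)
    price = implied_nvidia_price(MI350_ASP_USD, DISCOUNT)
    print(f"Bandwidth ratio: {ratio:.2f}x")
    print(f"Implied NVIDIA price: ${price:,.0f}")
```

Under those assumptions the ratio comes out around 2.76x (hence "nearly 3x"), and a $25,000 ASP at a 30% discount implies a comparable NVIDIA part priced at roughly $35,700.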

Market Dynamics: NVIDIA’s AI Fortress Now Has a Crack

Let’s be clear: NVIDIA still dominates. It commands over 90% of the discrete GPU market. Its CUDA ecosystem boasts over 5 million developers, and it offers rack-scale deployments (like its 72-GPU DGX SuperPods) that AMD still can’t match.

But HSBC sees a crucial shift brewing:

- $15.1 Billion in AI Revenue by 2026: HSBC’s forecast for AMD sits 57% above the consensus estimate, thanks to rising adoption.

- Just a 10% market share gain for AMD would cost NVIDIA $5 billion annually.

- Higher ASPs = Higher Leverage: AMD’s ability to charge $25,000 for its latest chips could add $3 billion to its top line.
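The bullets above also imply two numbers the note doesn’t state outright: the consensus figure HSBC is beating, and the size of the revenue pool behind the "$5 billion per 10% of share" claim. The sketch below backs both out. It assumes "57% beat" means forecast = consensus × 1.57, and (more speculatively) that the 10% refers to percentage points of a revenue-weighted market; the helper names are hypothetical.

```python
# Hypothetical helpers; inputs are HSBC's figures as quoted above, in $ billions.


def implied_consensus(forecast: float, beat_pct: float) -> float:
    """Consensus estimate implied by a forecast that beats it by `beat_pct` percent.

    Assumption: forecast = consensus * (1 + beat_pct / 100).
    """
    return forecast / (1.0 + beat_pct / 100.0)


def implied_market_size(revenue_shift: float, share_points: float) -> float:
    """Market size implied if moving `share_points` percentage points of share
    shifts `revenue_shift` dollars (assumes share is measured by revenue)."""
    return revenue_shift / (share_points / 100.0)


if __name__ == "__main__":
    consensus = implied_consensus(15.1, 57)
    market = implied_market_size(5.0, 10)
    print(f"Implied 2026 consensus for AMD AI revenue: ${consensus:.1f}B")
    print(f"Implied AI GPU revenue pool: ${market:.0f}B")
```

On these readings, the consensus HSBC is beating sits near $9.6 billion, and the share-shift claim implies a revenue pool of about $50 billion. If the note defines share differently, the second figure changes accordingly.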

That’s not “catching up.” That’s counterpunching with real muscle.

A Bigger Gamble: MI400 and the Vera Rubin War

If MI350 was the warning shot, MI400 is the artillery shell. Revealed last month in a joint appearance by AMD CEO Lisa Su and OpenAI’s Sam Altman (a surprise customer), MI400 is aimed squarely at NVIDIA’s Vera Rubin platform, due in late 2026.

HSBC’s Frank Lee and his analyst team suggest MI400 could accelerate AMD’s AI ambitions even further, assuming ROCm (AMD’s AI software stack) continues to improve. And let’s face it: software is still AMD’s weakest link. CUDA is a cathedral; ROCm is still building its scaffolding.

A Real Fight at the Top of the Silicon Food Chain

For the first time in years, NVIDIA’s AI dominance isn’t a foregone conclusion. AMD has put real hardware on the table, signed major clients, and convinced serious investors that it’s no longer just a CPU company trying to play in the big leagues. It’s a real AI rival, one sparse FLOP away from taking market share with conviction.