Rasterization

Rasterization is a technique that produces an image as seen from a single viewpoint, and it is what GPUs have used from the start. As GPUs became more and more powerful, rasterization made real-time graphics like the ones we see in games possible, and this growing GPU power eventually led to 3D gaming as we know it today.

Ray Tracing

In a few words, Ray Tracing (RT) creates more realistic scenes by accurately simulating lighting. Instead of only showing what is visible from a single point of view, it also accounts for what is visible from many other points and in many different directions. It works by shooting rays into a scene from the camera’s viewpoint. These rays interact with the scene’s objects, and the effects of these interactions have to be shown accurately, which demands heavy computing power to calculate the correct ray paths and the resulting lighting effects. On top of that, both a ray’s first hit on an object and its subsequent reflections off other objects must be calculated for a more realistic outcome. In real life, light rays affect the entire scene. The same applies to graphics, where information from the whole scene must be considered for a highly realistic result. This is extremely demanding in real time, even with today’s hardware, so Nvidia and AMD have introduced various techniques for real-time ray tracing that minimize the computational power required for realistic scenes.
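To make the “shooting rays from the camera” step concrete, here is a minimal Python sketch that builds one primary ray per pixel and finds where it first hits a single sphere. The function names, the pinhole camera looking down the -z axis, and the one-sphere scene are hypothetical choices for illustration. Real-time ray tracing on GPUs relies on acceleration structures and dedicated RT hardware rather than a loop like this, and the reflections mentioned above would be traced as further rays starting from each hit point.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def primary_ray(x, y, width, height, fov_deg=90.0):
    """Unit direction of the camera ray through the center of pixel (x, y).
    Hypothetical pinhole camera at the origin, looking down the -z axis."""
    scale = math.tan(math.radians(fov_deg) / 2)
    px = (2 * (x + 0.5) / width - 1) * (width / height) * scale
    py = (1 - 2 * (y + 0.5) / height) * scale
    length = math.sqrt(px * px + py * py + 1)
    return (px / length, py / length, -1 / length)

def intersect_sphere(origin, direction, center, radius):
    """Distance t to the nearest hit in front of the ray, or None on a miss.
    Solves |origin + t*direction - center|^2 = radius^2 for a unit direction."""
    oc = [o - c for o, c in zip(origin, center)]
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer intersection first
        if t > 1e-6:
            return t
    return None

# Usage: an ASCII "render" of one sphere placed 3 units in front of the camera.
W, H = 24, 12
for y in range(H):
    row = ""
    for x in range(W):
        hit = intersect_sphere((0, 0, 0), primary_ray(x, y, W, H), (0, 0, -3), 1.0)
        row += "#" if hit is not None else "."
    print(row)
```

Only the first hit is computed here; a ray tracer would then spawn reflection or shadow rays from that point, which is where the cost described above multiplies.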

Path Tracing

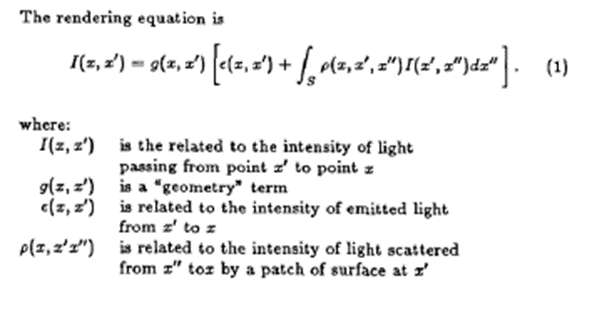

Path tracing is a method of modeling how light, including indirect lighting, moves through a scene, and it is not new at all: it originated in the mid-80s. In 1986, Caltech professor Jim Kajiya’s paper “The Rendering Equation” connected computer graphics with physics through ray tracing and introduced the path-tracing algorithm, making it possible to accurately represent how light scatters throughout a scene.
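For reference, the rendering equation is usually written today in the hemispherical form below (a later, widely used notation rather than the exact formulation in Kajiya’s paper). The radiance L_o leaving a point x in direction ω_o is the radiance L_e the surface emits plus all incoming radiance L_i, weighted by the surface’s reflectance function (BRDF) f_r and the cosine term (ω_i · n), integrated over the hemisphere Ω around the surface normal n:

$$
L_o(\mathbf{x}, \omega_o) = L_e(\mathbf{x}, \omega_o) + \int_{\Omega} f_r(\mathbf{x}, \omega_i, \omega_o)\, L_i(\mathbf{x}, \omega_i)\, (\omega_i \cdot \mathbf{n})\, d\omega_i
$$

Path tracing estimates this integral with Monte Carlo sampling. It draws N incoming directions ω_k with probability density p(ω_k) and averages their weighted contributions, and each L_i(x, ω_k) is itself found by tracing a further ray, which is what builds up a path of bounces:

$$
L_o(\mathbf{x}, \omega_o) \approx L_e(\mathbf{x}, \omega_o) + \frac{1}{N} \sum_{k=1}^{N} \frac{f_r(\mathbf{x}, \omega_k, \omega_o)\, L_i(\mathbf{x}, \omega_k)\, (\omega_k \cdot \mathbf{n})}{p(\omega_k)}
$$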

In this method, many rays are shot into the scene, and the algorithm has to track them as they interact with the scene’s objects. The critical factor that lowers computing power demands is that, instead of following every possible light path for every pixel, it randomly samples only a portion of these paths while still converging toward reality. To put it differently, in path tracing, the algorithm concentrates its samples on the reflections that matter most for an accurate picture of a real-life scene and spends little effort on the ones that are not so important. Needless to say, though, the more ray samples the algorithm takes into account, the better the visual result, but this comes at a higher computing cost.
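The trade-off between sample count, noise, and concentrating on the important rays can be sketched with a toy Monte Carlo estimate. This hypothetical Python example (not taken from any renderer) estimates the cosine-weighted integral of a constant incoming light of 1 over a hemisphere, whose exact value is π. The first function samples directions uniformly, so its error shrinks only as more samples are taken. The second uses importance sampling, drawing directions in proportion to cos θ, which is the standard, principled form of spending samples where they contribute most.

```python
import math
import random

def estimate_uniform(n_samples, rng):
    """Estimate the integral of L_i * cos(theta) over the hemisphere, with constant
    incoming radiance L_i = 1 (exact value: pi), using uniform direction sampling."""
    pdf = 1.0 / (2.0 * math.pi)  # uniform density over the hemisphere's solid angle
    total = 0.0
    for _ in range(n_samples):
        # For uniformly distributed hemisphere directions, cos(theta) is uniform on [0, 1).
        cos_theta = rng.random()
        total += 1.0 * cos_theta / pdf  # L_i = 1
    return total / n_samples

def estimate_cosine_weighted(n_samples, rng):
    """Same integral, importance-sampled with pdf = cos(theta) / pi."""
    total = 0.0
    for _ in range(n_samples):
        # Malley's method: sample the unit disk, then project up onto the hemisphere.
        r = math.sqrt(rng.random())
        cos_theta = math.sqrt(max(0.0, 1.0 - r * r))
        pdf = cos_theta / math.pi
        if pdf > 0.0:
            total += 1.0 * cos_theta / pdf  # L_i = 1
    return total / n_samples

rng = random.Random(0)
for n in (16, 256, 4096):
    error = abs(estimate_uniform(n, rng) - math.pi)
    print(f"{n:5d} uniform samples: error {error:.4f}")
print("cosine-weighted, 16 samples:", estimate_cosine_weighted(16, rng))
```

In this idealized case the importance-sampled estimator has no noise at all, because the sampling density matches the integrand exactly; in real scenes it only reduces the noise, which is why more samples still help.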

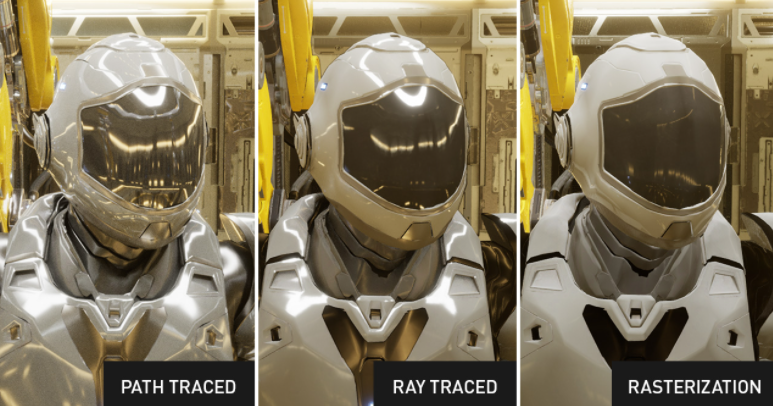

Because path tracing only takes a limited number of samples per pixel, its raw output is noisy, so it is combined with denoising algorithms to provide a cleaner image. The results are astonishing, as you can see in the screenshot below. To read more about path tracing, you should look at this article, written by an NVIDIA employee.
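Denoisers used with real-time path tracing are far more sophisticated than this (they combine information across frames, respect object edges, and in NVIDIA’s case use AI models such as DLSS Ray Reconstruction), but the basic idea of trading a little spatial detail for much less noise can be shown with a plain 3x3 box filter. This Python sketch is a toy stand-in, not any vendor’s denoiser; the image, noise level, and function names are hypothetical.

```python
import random

def box_denoise(img):
    """Replace each pixel with the mean of its 3x3 neighborhood (clipped at the borders)."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            out[y][x] = total / count
    return out

def mse(img, ref):
    """Mean squared error between two equally sized images."""
    n = len(img) * len(img[0])
    return sum((a - b) ** 2 for ra, rb in zip(img, ref) for a, b in zip(ra, rb)) / n

# A flat gray "ground truth" and a noisy estimate of it, like a low-sample render.
rng = random.Random(42)
truth = [[0.5] * 32 for _ in range(32)]
noisy = [[v + rng.uniform(-0.3, 0.3) for v in row] for row in truth]
print("noisy MSE:   ", mse(noisy, truth))
print("denoised MSE:", mse(box_denoise(noisy), truth))
```

A box filter also blurs genuine edges and fine detail, which is exactly why production denoisers are edge-aware and reuse data from previous frames instead.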

Combining Rasterization, Ray Tracing & Path Tracing!

Rasterization effectively casts one set of rays from a single point, and each ray stops at the first object it hits. Ray tracing casts rays from many points in any direction, and finally, path tracing tries to truly simulate the physics of light, with ray tracing as one of its components.

DLSS 4.0

Nvidia initially built DLSS Super Resolution on a convolutional autoencoder AI model that reconstructs a high-resolution current frame, pixel by pixel, from the low-resolution current frame and the high-resolution previous frame. To achieve this, the AI model is trained to predict an ultra-high-resolution reference image: the difference between the predicted and the reference image is used to train the neural network, and this process is repeated thousands of times until the network can predict a high-quality image.
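The training loop described above (predict, measure the difference from the reference, adjust, repeat) can be illustrated with a deliberately tiny Python example. It is not DLSS and makes several hypothetical simplifications: a 1D sine wave stands in for a frame, the low-resolution input keeps every other sample, and a two-weight linear model replaces the convolutional network. Gradient descent on the squared difference plays the role of training.

```python
import math

def make_frames(n_low=64):
    """Hypothetical 1D stand-ins: a high-res 'reference' signal and a low-res
    version that keeps every other sample."""
    high = [math.sin(2 * math.pi * i / (2 * n_low)) for i in range(2 * n_low)]
    return high[0::2], high

def train(low, high, steps=500, lr=0.1):
    """Fit two weights so that w0*low[i] + w1*low[i+1] predicts the missing
    high-res sample between them, by gradient descent on the squared error."""
    w0 = w1 = 0.0
    n = len(low) - 1
    losses = []
    for _ in range(steps):
        loss = g0 = g1 = 0.0
        for i in range(n):
            pred = w0 * low[i] + w1 * low[i + 1]   # model prediction
            err = pred - high[2 * i + 1]           # difference from the reference
            loss += err * err / n
            g0 += 2 * err * low[i] / n             # d(loss)/d(w0)
            g1 += 2 * err * low[i + 1] / n         # d(loss)/d(w1)
        losses.append(loss)
        w0 -= lr * g0                              # adjust the weights, then repeat
        w1 -= lr * g1
    return w0, w1, losses

low, high = make_frames()
w0, w1, losses = train(low, high)
print(f"loss {losses[0]:.4f} -> {losses[-1]:.6f}, weights {w0:.3f}, {w1:.3f}")
```

The weights settle near 0.5 each, meaning the model rediscovers linear interpolation for this smooth signal; a real upscaling network learns far richer mappings from far more data.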

DLSS Ray Reconstruction, DLSS Super Resolution, and DLAA will now be powered by the graphics industry’s first real-time application of ‘transformers,’ the same advanced architecture powering frontier AI models like ChatGPT, Flux, and Gemini. DLSS transformer models improve image quality with improved temporal stability, less ghosting, and greater detail in motion.

NVIDIA DLSS is a suite of AI rendering technologies powered by Tensor Cores on GeForce RTX GPUs for faster frame rates, better image quality, and increased responsiveness. DLSS includes Super Resolution & DLAA (available for all RTX GPUs), Frame Generation (RTX 40/50 Series GPUs), and Ray Reconstruction (available for all RTX GPUs).

(Multi) Frame Generation

The significant difference between frame generation on the RTX 40 and 50 series is that the latter can generate up to three frames for every rendered frame instead of one, offering a much larger performance boost.

DLSS Multi Frame Generation generates up to three additional frames per traditionally rendered frame, working in unison with the complete suite of DLSS technologies to multiply frame rates by up to 8X over traditional brute-force rendering. According to NVIDIA, this performance improvement allows for 4K 240 FPS fully ray-traced gaming on the RTX 5090.

In the original DLSS Frame Generation, introduced with the RTX 40 series, a hardware optical flow accelerator provides the DLSS algorithm with the data it needs to generate a new frame with a neural network, using information from previously rendered frames, so the final result is up to double the frame rate (FPS). In Multi Frame Generation, an AI model takes over the optical flow accelerator’s role and generates up to three frames for each actually rendered frame, so in essence we get three extra frames, instead of only one, from each rendered one.
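The frame-rate arithmetic behind these claims can be sketched as follows. This Python snippet is a simplified, hypothetical model: it assumes every rendered frame is followed by a fixed number of generated frames and ignores the GPU time that generation itself costs, so real gains are lower. The 0.25 pixel ratio corresponds to upscaling 1920x1080 to 3840x2160, as DLSS Super Resolution does in Performance mode at 4K.

```python
def displayed_fps(rendered_fps, generated_per_rendered):
    """Ideal output frame rate when each rendered frame is followed by
    `generated_per_rendered` AI-generated frames (generation cost ignored)."""
    return rendered_fps * (1 + generated_per_rendered)

def rendered_pixel_fraction(render_pixel_ratio, generated_per_rendered):
    """Share of displayed pixels that were traditionally rendered, given the
    upscaler's rendered-to-output pixel ratio and the frame-generation factor."""
    return render_pixel_ratio / (1 + generated_per_rendered)

ratio = (1920 * 1080) / (3840 * 2160)   # Performance-mode upscaling to 4K
for generated in (1, 3):                # RTX 40 Frame Generation vs. 4x Multi Frame Generation
    print(generated, displayed_fps(60, generated), rendered_pixel_fraction(ratio, generated))
```

In this idealized model, 4x Multi Frame Generation on top of Performance-mode upscaling means only one in every 16 displayed pixels comes from traditional rendering.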

Suppose the rendered frame is produced through Super Resolution and we have set frame generation to its maximum setting (4x), displaying four frames for every rendered one. In that case, we can run into frame pacing problems. What is frame pacing? Let’s check that below:

Assume that this is the proper sequence of frames displayed over 1/6th of a second (ten refreshes of a 60 Hz display) in a 30 fps game with proper frame pacing:

1 – 1 – 2 – 2 – 3 – 3 – 4 – 4 – 5 – 5

Each frame (1-5) stays on screen for exactly two refreshes, so motion is smooth.

Now, in a game with frame pacing issues across the same period, you can get something like this:

1 – 1 – 2 – 2 – 2 – 3 – 4 – 5 – 5 – 5

In this example, the third frame takes longer to render, so frame 2 stays on screen for three refreshes, frames 3 and 4 get only one refresh each, and frame 5 is shown three times. Even though five frames are still displayed in 1/6th of a second (30 fps on average), the game feels choppy rather than smooth.
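The difference between the two sequences can be quantified. This Python sketch (hypothetical helper names; a 60 Hz display is assumed, as in the 1/6th-of-a-second example) counts how many consecutive refreshes each frame stays on screen. Both sequences average 2 refreshes, or about 33.3 ms, per frame, which is 30 fps, but the choppy one has a large spread, and that spread is what frame pacing problems feel like.

```python
from itertools import groupby
from statistics import mean, pstdev

REFRESH_MS = 1000 / 60  # assumption: a 60 Hz display, matching the example

def on_screen_times(sequence):
    """Number of consecutive refreshes each frame stays on screen."""
    return [len(list(run)) for _, run in groupby(sequence)]

def describe(name, sequence):
    times = on_screen_times(sequence)
    print(f"{name}: refreshes per frame {times}, "
          f"mean {mean(times) * REFRESH_MS:.1f} ms, "
          f"std dev {pstdev(times) * REFRESH_MS:.1f} ms")

smooth = [1, 1, 2, 2, 3, 3, 4, 4, 5, 5]
choppy = [1, 1, 2, 2, 2, 3, 4, 5, 5, 5]
describe("smooth", smooth)
describe("choppy", choppy)
```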

DLSS 4 addresses frame pacing with dedicated hardware built into Blackwell’s Display Engine, which, according to NVIDIA, reduces frame-time variability by 5 to 10 times.

What NVIDIA Has to Say About Flip Metering and Measuring 1% Lows

- What is flip metering, and how is it used with DLSS Multi Frame Generation?

Frame Generation relies on evenly paced frames for smooth performance. Traditional CPU-based pacing can introduce variability, affecting smoothness. With the release of Blackwell, NVIDIA has upgraded DLSS Frame Generation and Multi Frame Generation to use Flip Metering instead of CPU-based pacing, enabling the display engine for 40 and 50-Series GPUs to control display timing precisely. Blackwell architecture was built with enhanced hardware flip metering capabilities to provide the speed and accuracy best suited for a smooth, high-quality experience across games and modes.
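The job flip metering performs, holding each finished frame until its slot so frames reach the screen at even intervals, can be illustrated with a toy scheduler. This Python sketch is hypothetical and is not NVIDIA’s implementation, which runs in display-engine hardware. The timestamps assume a rendered frame every 20 ms, with three generated frames becoming ready in a burst right after it.

```python
def flips_unpaced(ready_ms):
    """Show every frame the moment it is ready."""
    return list(ready_ms)

def flips_metered(ready_ms, interval_ms):
    """Show each frame no earlier than `interval_ms` after the previous flip."""
    flips = []
    next_slot = ready_ms[0]
    for ready in ready_ms:
        flip = max(ready, next_slot)   # hold the frame until its slot if it is early
        flips.append(flip)
        next_slot = flip + interval_ms
    return flips

def intervals(flips):
    return [b - a for a, b in zip(flips, flips[1:])]

# Hypothetical ready times: a rendered frame every 20 ms plus three generated
# frames that finish 2, 3 and 4 ms later (4x frame generation).
ready = [base + offset for base in (0, 20, 40) for offset in (0, 2, 3, 4)]
print("unpaced:", intervals(flips_unpaced(ready)))
print("metered:", intervals(flips_metered(ready, 20 / 4)))
```

The cost is visible too: in this example the last generated frame of each burst is held back by 11 ms, so pacing trades a little latency for smoothness.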

- Why did NVIDIA decide to use MsBetweenDisplayChange to calculate 1% Lows and FPS instead of alternate methods (MsBetweenPresents/CPU Busy & Wait times)?

MsBetweenDisplayChange is the best metric for calculating FPS and 1% Lows because it aligns more closely with the end-user experience, capturing all delays that affect how frames are delivered to the display.

Standard methods for measuring frame times in other tools measure when the CPU hands off frames to the GPU. This basis for FPS metrics leads to slight inaccuracies, as frames experience variable delays from the downstream rendering pipeline that affect how long frames are actually displayed. And in the case of DLSS Frame Generation and Multi Frame Generation with flip metering, any method of frame time measurement besides MsBetweenDisplayChange will be highly inaccurate. Other tools that measure frametimes early in the pipeline will report unpaced frames of DLSS. At the same time, gamers will experience perfectly paced frames via flip-metering, which occurs right before scan out. MsBetweenDisplayChange occurs late enough to accurately capture how frames are paced to screen, reflecting what gamers will see when they use DLSS’s enhanced Frame Generation and Multi Frame Generation.
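Once frame times are collected, average FPS and 1% lows are simple to compute. Definitions of “1% low” vary between tools. This Python sketch uses one common definition, the average frame rate over the slowest 1% of frames, and assumes the input is a list of per-frame times in milliseconds, such as MsBetweenDisplayChange values from a capture. The function names and sample data are hypothetical.

```python
def average_fps(frame_times_ms):
    """Frames displayed divided by total time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

def one_percent_low_fps(frame_times_ms):
    """Average frame rate of the slowest 1% of frames (rounded down, at least one)."""
    worst = sorted(frame_times_ms, reverse=True)
    count = max(1, len(worst) // 100)
    slowest = worst[:count]
    return 1000.0 * len(slowest) / sum(slowest)

# Hypothetical capture: 99 frames at 10 ms and a single 50 ms stutter.
frame_times = [10.0] * 99 + [50.0]
print(f"average: {average_fps(frame_times):.1f} fps, "
      f"1% low: {one_percent_low_fps(frame_times):.1f} fps")
```

The average barely registers the stutter, while the 1% low exposes it, which is why the point in the pipeline where frame times are measured matters so much.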

The New Transformer Model

Previously, DLSS used Convolutional Neural Networks (CNNs) to generate new pixels by analyzing localized context and tracking changes in those regions over successive frames. After six years of continuous improvements, we’ve reached the limits of what’s possible with the DLSS CNN architecture.

The new DLSS transformer model uses a vision transformer, enabling self-attention operations to evaluate the relative importance of each pixel across the entire frame and over multiple frames. Employing double the parameters of the CNN model to achieve a deeper understanding of scenes, the new model generates pixels that offer greater stability, reduced ghosting, greater detail in motion, and smoother edges in a scene.
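The core operation of a vision transformer, scaled dot-product self-attention, can be sketched in a few lines of Python. This is a simplified, hypothetical illustration, not NVIDIA’s model: each “token” is a small feature vector standing in for a pixel or patch, there is a single attention head, and the learned query, key, and value projections are replaced by the identity. It shows the property described above: every token computes a weight for every other token in the frame, rather than only for a local neighborhood as a convolution does.

```python
import math

def softmax(scores):
    m = max(scores)                      # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention with Q = K = V = tokens."""
    d = len(tokens[0])
    scale = 1.0 / math.sqrt(d)
    outputs, weights = [], []
    for q in tokens:
        # Score this query against every token in the frame, not just its neighbors.
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in tokens]
        w = softmax(scores)
        weights.append(w)
        # Output = attention-weighted mix of all value vectors.
        outputs.append([sum(w[j] * tokens[j][c] for j in range(len(tokens)))
                        for c in range(d)])
    return outputs, weights

# Four hypothetical pixel features (e.g. brightness and an edge response).
pixels = [[0.9, 0.1], [0.8, 0.2], [0.1, 0.9], [0.85, 0.15]]
out, attn = self_attention(pixels)
for row in attn:
    print([round(x, 3) for x in row])
```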

According to NVIDIA, in intensive ray-traced content, the new transformer model for Ray Reconstruction delivers a significant uplift in image quality, especially in scenes with challenging lighting conditions. For example, in these scenes from Alan Wake 2, stability is increased on the highly-detailed chainlink fence, ghosting on the fan blades is reduced, and shimmering is eliminated on the power lines, improving the player’s immersive experience in the third-person game.

RTX Neural Shaders

RTX Neural Shaders bring AI to programmable shaders

Twenty-five years ago, NVIDIA introduced GeForce and programmable shaders, unlocking new chapters in graphics technology, from pixel shading to compute shading to real-time ray tracing. NVIDIA is now introducing RTX Neural Shaders, which bring small neural networks into programmable shaders, enabling new graphics capabilities. The applications of neural shading are vast, including radiance caching, texture compression, materials, radiance fields, and more.

The RTX Neural Shaders SDK enables developers to train their game data and shader code on an RTX AI PC and accelerate their neural representations and model weights with NVIDIA Tensor Cores at runtime. During training, the neural game data is compared to the output of the traditional data and refined over multiple cycles. Developers can simplify the training process with Slang, a shading language that splits large, complex functions into smaller pieces that are easier to handle.
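At runtime, a neural shader evaluates a small neural network for each pixel where a traditional shader would run hand-written code. This Python sketch shows what such an evaluation amounts to: a tiny two-layer multilayer perceptron with ReLU activations. The inputs (two cosine terms), the layer sizes, and the weights are all hypothetical; in practice the weights would come from the training process described above, and the matrix math would run on Tensor Cores inside the shader, not in Python.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def dense(inputs, weights, biases):
    """Fully connected layer: one weight row per output neuron."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

# Hypothetical "trained" weights for a 2 -> 2 -> 1 network.
W1 = [[1.0, 0.0],
      [0.5, 0.5]]
B1 = [0.0, -0.1]
W2 = [[0.8, 0.4]]
B2 = [0.05]

def neural_shade(n_dot_l, n_dot_v):
    """Return a shading value for one pixel from two (hypothetical) inputs."""
    hidden = relu(dense([n_dot_l, n_dot_v], W1, B1))
    return dense(hidden, W2, B2)[0]

print(neural_shade(0.5, 1.0))
```

Because the network has a fixed, small size, its cost per pixel is predictable, which is what makes this approach attractive for replacing expensive, layered material code.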

Train your game data and shader code and accelerate inference with NVIDIA Tensor Cores

This technology is used for three applications: RTX Neural Texture Compression, RTX Neural Materials, and Neural Radiance Cache (NRC).

- RTX Neural Texture Compression uses AI to compress thousands of textures in less than a minute. Their neural representations are stored or accessed in real time or loaded directly into memory without further modification. Neurally compressed textures can use up to 7x less VRAM or system memory than traditional block-compressed textures at the same visual quality.

- RTX Neural Materials uses AI to compress complex, multi-layered shader code typically reserved for offline materials such as porcelain and silk. Material processing is up to 5x faster, making it possible to render film-quality assets at game-ready frame rates.

- RTX Neural Radiance Cache is a neural shader that uses neural networks trained on live game data to estimate indirect lighting for a game scene more accurately and efficiently. NRC partially traces 1-2 rays before storing them in a radiance cache and infers an effectively infinite number of rays and bounces for a more accurate representation of indirect lighting in the game scene. Not only is path-traced indirect lighting improved, but performance also increases, as fewer rays need to be traced. NRC is now available through the RTX Global Illumination SDK and will be available in Portal with RTX and, in the coming months, RTX Remix.
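To put the “up to 7x” texture figure from the first bullet in perspective, here is a back-of-the-envelope calculation in Python. It assumes a BC7 block-compressed texture (16 bytes per 4x4 block, i.e. 1 byte per texel), ignores mipmaps, and simply divides by the best-case factor of 7; actual neural compression ratios depend on the texture set and quality target.

```python
import math

def bc7_bytes(width, height):
    """Size of one BC7 texture: 16 bytes per 4x4 texel block, no mipmaps."""
    blocks = math.ceil(width / 4) * math.ceil(height / 4)
    return blocks * 16

MIB = 1024 * 1024
block_compressed = bc7_bytes(4096, 4096)
neural_best_case = block_compressed / 7   # "up to 7x" best case from the text
print(f"BC7: {block_compressed / MIB:.1f} MiB, "
      f"neural (best case): {neural_best_case / MIB:.2f} MiB")
```

Multiplied across the hundreds of textures in a modern game, savings of this order are what make higher-resolution assets fit in a fixed VRAM budget.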

Reflex 2

In 2020, NVIDIA released Reflex, a technology that reduces PC latency in top competitive games by an average of 50%. NVIDIA Reflex accomplishes this by synchronizing CPU and GPU work, so player actions are reflected in-game quicker, giving gamers a competitive edge in multiplayer games and making single-player titles more responsive.

NVIDIA Reflex has been integrated into over 100 games in the last four years. According to NVIDIA, over 90% of gamers turn Reflex on, allowing them to experience better responsiveness, aim more accurately, and win more games.

NVIDIA Reflex 2 can reduce PC latency by up to 75%. Reflex 2 combines Reflex Low Latency mode with a new Frame Warp technology, further reducing latency by updating the rendered game frame based on the latest mouse input before sending it to the display.
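The basic idea behind Frame Warp, shifting an already rendered frame to match newer mouse input just before display, can be sketched in Python for the simplest case, a horizontal mouse turn. This is a hypothetical simplification and not NVIDIA’s algorithm: it handles a single image row, approximates a pure yaw rotation as a uniform pixel shift (width divided by horizontal field of view), treats positive yaw as a turn to the right, and marks with None the revealed pixels that a real implementation would have to fill in.

```python
def warp_row(row, render_yaw_deg, latest_yaw_deg, hfov_deg):
    """Shift one rendered image row to match a newer camera yaw.
    Small-angle approximation: every degree of yaw moves the image by
    width / hfov_deg pixels. Positive yaw = turning right, which moves
    scene content to the left on screen."""
    width = len(row)
    shift = round((latest_yaw_deg - render_yaw_deg) * width / hfov_deg)
    warped = [None] * width            # None marks pixels with no source data
    for x in range(width):
        src = x + shift                # where this pixel's content was rendered
        if 0 <= src < width:
            warped[x] = row[src]
    return warped

rendered = list(range(10))             # a 10-pixel row rendered at yaw 0
print(warp_row(rendered, 0.0, 18.0, 90.0))
```

Because the shift uses input sampled after the frame was rendered, the image on screen reflects the newest mouse movement, which is where the additional latency saving comes from.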

