Chinese researchers have unveiled a new class of photonic (light-based) AI chips that they claim can outperform NVIDIA’s market-leading GPUs by more than 100 times in speed and energy efficiency, at least for specific generative AI workloads such as image synthesis and video generation.

The most advanced of these chips, called LightGen, was developed by a joint team from Shanghai Jiao Tong University and Tsinghua University, with results published in Science. Another system, ACCEL, was developed at Tsinghua University and combines photonic and analog electronic components.

The claims are striking, but they come with important caveats.

These chips are not general-purpose processors, and they are not replacements for NVIDIA GPUs like the A100 or H100. Instead, they represent a fundamentally different computing architecture optimized for narrow, predefined AI tasks, particularly in computer vision and generative image workloads.

Electrons vs. Photons

Modern GPUs rely on electrons flowing through transistors to execute digital instructions step by step. This makes them incredibly flexible: they can train models, run software, handle memory-intensive workloads, and support a wide range of applications.

The trade-off is power and heat. High-end GPUs consume enormous amounts of energy, generate significant heat, and require cutting-edge manufacturing processes.

Photonic chips take a different path.

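Instead of electrons, they use photons (light itself) to perform calculations through optical interference. The computation happens as the light propagates through the optical circuit, and that propagation dissipates very little energy as heat. As a result, these systems can achieve extreme speed and energy efficiency for certain mathematical operations, such as the matrix multiplications at the core of neural networks.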
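To make "calculation through optical interference" concrete, here is a minimal sketch of an idealized Mach-Zehnder interferometer, a common building block in photonic circuits. It is an illustration only, not the LightGen or ACCEL design: the function name `mach_zehnder`, the lossless 50/50 beamsplitter model, and the single phase parameter `theta` are all assumptions made for this example.

```python
import cmath
import math


def mach_zehnder(theta, field_in=(1 + 0j, 0j)):
    """Propagate two input field amplitudes through an idealized, lossless
    Mach-Zehnder interferometer: beamsplitter -> phase shift -> beamsplitter.
    (Hypothetical helper for illustration; not taken from either chip.)"""
    s = 1 / math.sqrt(2)

    def beamsplitter(a, b):
        # Ideal 50/50 splitter: each output mixes both inputs, with a
        # 90-degree phase shift (factor of i) on the cross path.
        return s * (a + 1j * b), s * (1j * a + b)

    a, b = beamsplitter(*field_in)
    a *= cmath.exp(1j * theta)       # tunable phase shift on the upper arm
    a, b = beamsplitter(a, b)        # the two paths interfere here
    return abs(a) ** 2, abs(b) ** 2  # detected intensities at the two outputs


for theta in (0.0, math.pi / 2, math.pi):
    top, bottom = mach_zehnder(theta)
    print(f"theta={theta:.2f} rad  top={top:.3f}  bottom={bottom:.3f}")
```

With `theta = 0` all of the light leaves the bottom port, with `theta = pi` it all leaves the top port, and intermediate phases split it as sin²(θ/2) and cos²(θ/2). The "weight" is set by a physical phase rather than by an instruction stream, which is why such hardware is fast but fixed-function.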

Think of it this way:

- NVIDIA GPUs are programmable supercomputers

- Photonic AI chips are purpose-built analog machines

They are astonishingly fast at what they are designed to do, but they can’t do everything.

ACCEL: Hybrid Photonic Power

The ACCEL chip is a hybrid design combining photonic components with analog electronics. Importantly, it can be manufactured using older fabrication processes, such as those available at China’s SMIC, rather than the latest cutting-edge nodes.

ACCEL reportedly delivers 4.6 peta-operations per second while consuming extremely little power. The figure is sometimes quoted as 4.6 petaFLOPS, but these are pre-defined analog operations rather than floating-point instructions, so they cannot be used for programmable code execution or memory-intensive tasks.

That makes ACCEL ideal for applications like:

- Image recognition

- Low-light vision

- Optical signal processing

But unsuitable for:

- Running software

- Training large AI models

- Replacing CPUs or GPUs in general computing

LightGen: Fully Optical Generative AI

The more ambitious project, LightGen, goes further.

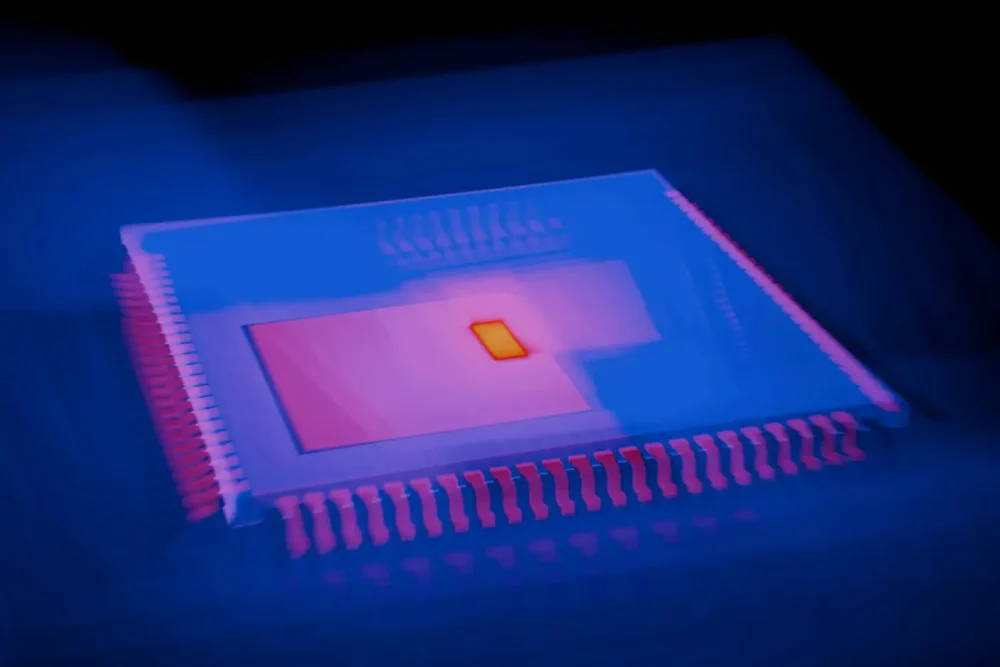

LightGen is a fully optical AI chip integrating over 2 million photonic “neurons” on a compact die measuring 136.5 mm². According to the research team, the chip can perform:

- High-resolution image generation (up to 512×512 pixels)

- Image denoising

- Style transfer

- 3D image generation and manipulation

- Video-related generative tasks

At a conservative estimate, LightGen achieved:

- 35,700 TOPS (tera operations per second)

- 664 TOPS per watt of energy efficiency

In these tightly constrained tasks, its end-to-end performance exceeded that of NVIDIA’s A100 GPU by more than 100×, both in speed and energy efficiency, while generating outputs of comparable quality.
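The two headline figures can be combined to get a rough sense of scale. The sketch below is back-of-the-envelope arithmetic on the numbers quoted above. It assumes both figures describe the same operating point, and the resulting power and energy-per-operation values are derived here, not reported by the researchers.

```python
# Figures quoted in the article (as reported by the research team).
throughput_tops = 35_700        # tera-operations per second
efficiency_tops_per_watt = 664  # tera-operations per second per watt

TERA = 1e12

# Power = throughput / efficiency (the TOPS units cancel, leaving watts).
# Assumption: both figures refer to the same operating conditions.
implied_power_watts = throughput_tops / efficiency_tops_per_watt

# Energy per operation = 1 / (operations per joule).
energy_per_op_joules = 1.0 / (efficiency_tops_per_watt * TERA)

print(f"Implied power draw: {implied_power_watts:.1f} W")
print(f"Energy per operation: {energy_per_op_joules * 1e15:.2f} fJ")
```

Under that assumption the figures imply a power draw of roughly 54 W and about 1.5 femtojoules per operation.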

Why This Doesn’t Threaten NVIDIA

Despite the dramatic numbers, LightGen doesn’t put NVIDIA’s business at risk, at least not today.

The reason is simple: flexibility matters.

LightGen cannot:

- Run arbitrary programs

- Handle large, dynamic memory workloads

- Train AI models

- Replace GPUs in data centers or consumer devices

Its power comes from doing one class of operations extremely well, not from being adaptable.

In other words, LightGen shows that photonic computing can perform real generative AI, but only within carefully controlled domains.

As the researchers themselves acknowledge, this is a new computing paradigm, not a GPU competitor.

Why It Matters

What makes LightGen and ACCEL important is not market disruption, but direction.

As AI workloads explode, energy consumption has become one of the industry’s biggest constraints. Photonic AI offers a credible path toward:

- Dramatically lower power consumption

- Faster inference for vision-heavy workloads

- Specialized AI accelerators that offload work from GPUs

The LightGen team also introduced new ideas, including:

- An “optical latent space” architecture for efficient data compression

- A novel unsupervised training algorithm that reduces dependence on massive labeled datasets

Together, these advances suggest that photonic computing could eventually become a core platform for specific AI tasks, rather than a laboratory curiosity.

Bottom Line

Chinese researchers have demonstrated that light-based AI hardware can outperform GPUs by orders of magnitude, but only when the problem is narrowly defined.

NVIDIA won’t lose sleep over LightGen. But data centers of the future may quietly rely on photonic accelerators to handle specialized workloads, saving power, reducing heat, and letting GPUs focus on what they do best. This isn’t the end of electronic computing. It’s the beginning of hybrid AI architectures, where electrons and photons each play their part.