A recent study by Apple researchers has raised new questions about the nature of artificial intelligence and large language models (LLMs) like ChatGPT. According to their findings, LLMs lack genuine logical reasoning despite giving seemingly intelligent responses. The study, published on the arXiv preprint server, suggests that these AI models create an illusion of intelligence.

As AI has advanced rapidly in recent years, models like ChatGPT have impressed users with seemingly intelligent responses, leading some to wonder whether they possess actual understanding. Apple’s researchers tackled this question by testing several LLMs to determine whether they could grasp the nuances of everyday situations that require logical thought.

For example, if a child asks a parent how many apples are in a bag while also noting that some are too small to eat, both the child and parent understand that the size of the apples is irrelevant to the number. This ability to separate pertinent from irrelevant information is a basic form of logical reasoning.

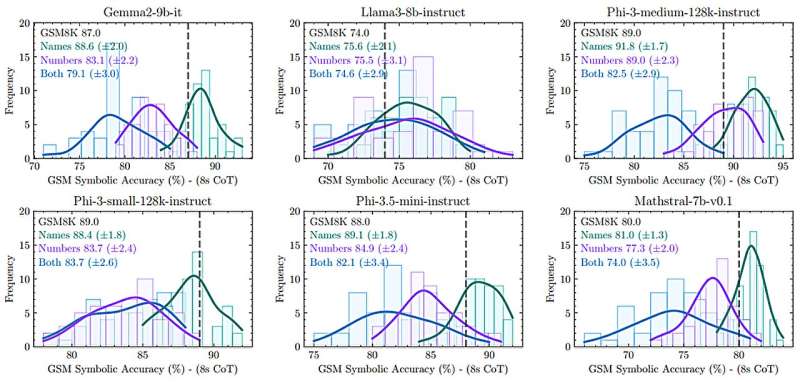

The Apple team devised a series of tests for multiple LLMs, adding non-pertinent information to the questions. Surprisingly, this extra detail was enough to trip up the AI models, leading them to give incorrect or nonsensical answers to questions they had previously answered correctly. According to the researchers, this shows that LLMs do not truly understand what they are being asked. Instead, they rely on recognizing patterns in the structure of sentences and then generating a response based on patterns learned during training.
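The testing idea described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the researchers' actual benchmark or code: the function names `add_distractor`, `naive_solver`, and `is_robust` are hypothetical, the apple counts are invented for the example, and `naive_solver` is a deliberately simple toy that adds up every number it sees, standing in for any system that matches surface patterns rather than reasoning about relevance.

```python
import re
from typing import Callable


def add_distractor(question: str, distractor: str) -> str:
    """Insert an irrelevant sentence just before the final sentence (the actual question)."""
    sentences = re.split(r"(?<=[.?!])\s+", question.strip())
    return " ".join(sentences[:-1] + [distractor, sentences[-1]])


def naive_solver(question: str) -> int:
    """Toy stand-in for a pattern matcher (NOT an LLM): sums every number in the text."""
    return sum(int(n) for n in re.findall(r"\d+", question))


def is_robust(solver: Callable[[str], int], question: str,
              distractor: str, expected: int) -> bool:
    """True only if the solver is correct both with and without the irrelevant detail."""
    perturbed = add_distractor(question, distractor)
    return solver(question) == expected and solver(perturbed) == expected


# Invented example in the spirit of the apples-in-a-bag scenario.
question = "A bag holds 5 red apples and 7 green apples. How many apples are in the bag?"
distractor = "Note that 3 of the apples are too small to eat."

print(add_distractor(question, distractor))
print(naive_solver(question))                              # correct on the plain question
print(naive_solver(add_distractor(question, distractor)))  # thrown off by the extra number
print(is_robust(naive_solver, question, distractor, expected=12))
```

To try the same check against a real model, `naive_solver` could be swapped for any function that sends the question text to that model and parses a number from its reply; the comparison logic in `is_robust` would stay the same.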

Moreover, the researchers found that LLMs often provide answers that seem right at first glance but fall apart upon closer inspection. For example, when asked how they “feel” about something, some LLMs respond in a way that suggests they can experience emotions—despite this being impossible for AI.

ChatGPT’s Thoughts on the Matter

This study touches on a critical aspect of the current AI landscape: while AI is impressive, it’s far from truly “intelligent.” The models are exceptionally good at mimicking conversation and performing tasks based on massive data sets. However, they do not yet reason logically as humans do. Instead of genuine understanding, these models rely on statistical associations between words and phrases to produce what seem like thoughtful responses.

This doesn’t mean AI isn’t helpful; far from it. However, the study highlights the limitations that exist when it comes to contextual understanding and logical thought. As AI develops, the challenge will be finding ways to bridge the gap between pattern recognition and genuine comprehension. For now, AI remains a powerful tool that requires careful oversight and interpretation by human users.

Source: techxplore.com